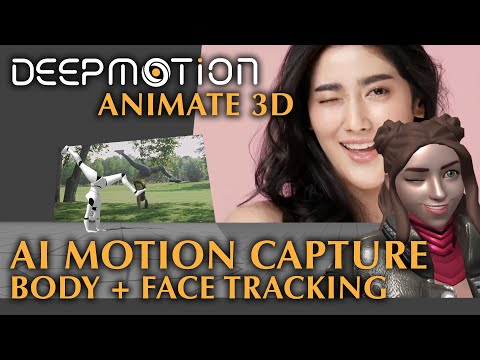

DeepMotion’s AI Motion Capture Solution Adds Markerless Face Tracking

The team at DeepMotion has advanced its mission to revolutionize motion capture technology by introducing markerless Face Tracking. The AI-powered motion capture solution can now capture full-body motion together with facial expressions, all from a single video. Anyone can try it for free.

Adding face capture to Animate 3D gives users more control over expressing their vision by generating 3D face animations in minutes. No special hardware is needed, so video captured on any device can be used to generate 3D face animations.

The new face tracking feature is the latest in a continuous and aggressive release schedule that brings new features to the service every few weeks. Other recent features include half-body tracking and various foot locking modes that enable users to capture motions like swimming and acrobatics. “They’ve been killing it with the updates,” says Virtual YouTuber Fruitpex in their recent coverage of top motion capture solutions.

The new face tracking AI captures facial features including blinking, expressive mouth motions, eyebrows, and head positions with markerless tracking, no dots necessary. To complement this new feature, they recently launched half-body tracking and tight headshots, which further enable tracking of the irises and higher-fidelity features of the face. Users can also stick with full-body tracking, which still provides general facial expressions even though the face is further from the camera.

They recommend trying the new face motion capture with their Custom Characters feature to get a sense of what it can look like on a real character. Users can customize their own avatars with the built-in Wolf 3D metaverse avatar creator Ready Player Me, seen recently in other applications like VRChat. Users can also upload their own unique character directly to the service, but they will need to make sure it is set up with the standard ARKit blendshapes for it to work correctly. Alternatively, users can select the Default character option, which generates the animation on a default character that can then be imported into the 3D modeling or animation software of their choice and retargeted onto their own character.
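For readers preparing a custom character, the ARKit requirement can be checked before upload. The following is a minimal sketch, not DeepMotion's own validation: it lists the 52 blendshape names defined by Apple's ARKit and reports which ones a character is missing. The helper `missing_arkit_blendshapes` is a hypothetical name, and it is an assumption that the service expects all 52 names with this exact spelling and casing.

```python
# ARKit blendshape names that come in Left/Right pairs.
_PAIRED = [
    "eyeBlink", "eyeLookDown", "eyeLookIn", "eyeLookOut", "eyeLookUp",
    "eyeSquint", "eyeWide", "mouthSmile", "mouthFrown", "mouthDimple",
    "mouthStretch", "mouthPress", "mouthLowerDown", "mouthUpperUp",
    "browDown", "browOuterUp", "cheekSquint", "noseSneer",
]

# ARKit blendshape names that have no Left/Right variant.
_SINGLE = [
    "jawForward", "jawLeft", "jawRight", "jawOpen",
    "mouthClose", "mouthFunnel", "mouthPucker", "mouthLeft", "mouthRight",
    "mouthRollLower", "mouthRollUpper", "mouthShrugLower", "mouthShrugUpper",
    "browInnerUp", "cheekPuff", "tongueOut",
]

# Full set of 52 names (18 pairs + 16 singles).
ARKIT_BLENDSHAPES = sorted(
    [f"{name}{side}" for name in _PAIRED for side in ("Left", "Right")] + _SINGLE
)


def missing_arkit_blendshapes(character_shape_names):
    """Return the ARKit blendshape names absent from a character (hypothetical helper).

    `character_shape_names` is any iterable of shape-key names exported
    from your modeling tool. Matching is case-sensitive, which is an assumption.
    """
    present = set(character_shape_names)
    return [name for name in ARKIT_BLENDSHAPES if name not in present]


if __name__ == "__main__":
    # Example: a character that only has a few shapes set up.
    my_character = ["jawOpen", "eyeBlinkLeft", "eyeBlinkRight"]
    missing = missing_arkit_blendshapes(my_character)
    print(f"{len(missing)} ARKit blendshapes missing")
```

The list of shape names would come from whatever tool the character was authored in; how to export them depends on that tool and is outside this sketch.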

All users now have access to this feature, available in the Animation Settings when creating a new animation. The animation download includes the full-body motion data plus facial blendshape weights based on the ARKit blendshapes. You can check out their FAQ to learn more about how to use Animate 3D Face Tracking for your projects.

ABOUT DEEPMOTION

Since its inception in 2014, DeepMotion has been on a mission to bring digital characters to life through smarter motion technology. Using physics simulation, computer vision, and machine learning, DeepMotion’s solutions bridge physical and digital motion for virtual characters and machines.