Understanding Risk, Artificial Intelligence, and Improving Software Quality

The software discipline has broad involvement across each of the NASA Mission Directorates. Some recent discipline focus and development areas are highlighted below, along with a look at the Software Technical Discipline Team’s (TDT) approach to evolving discipline best practices toward the future.

Understanding Automation Risk

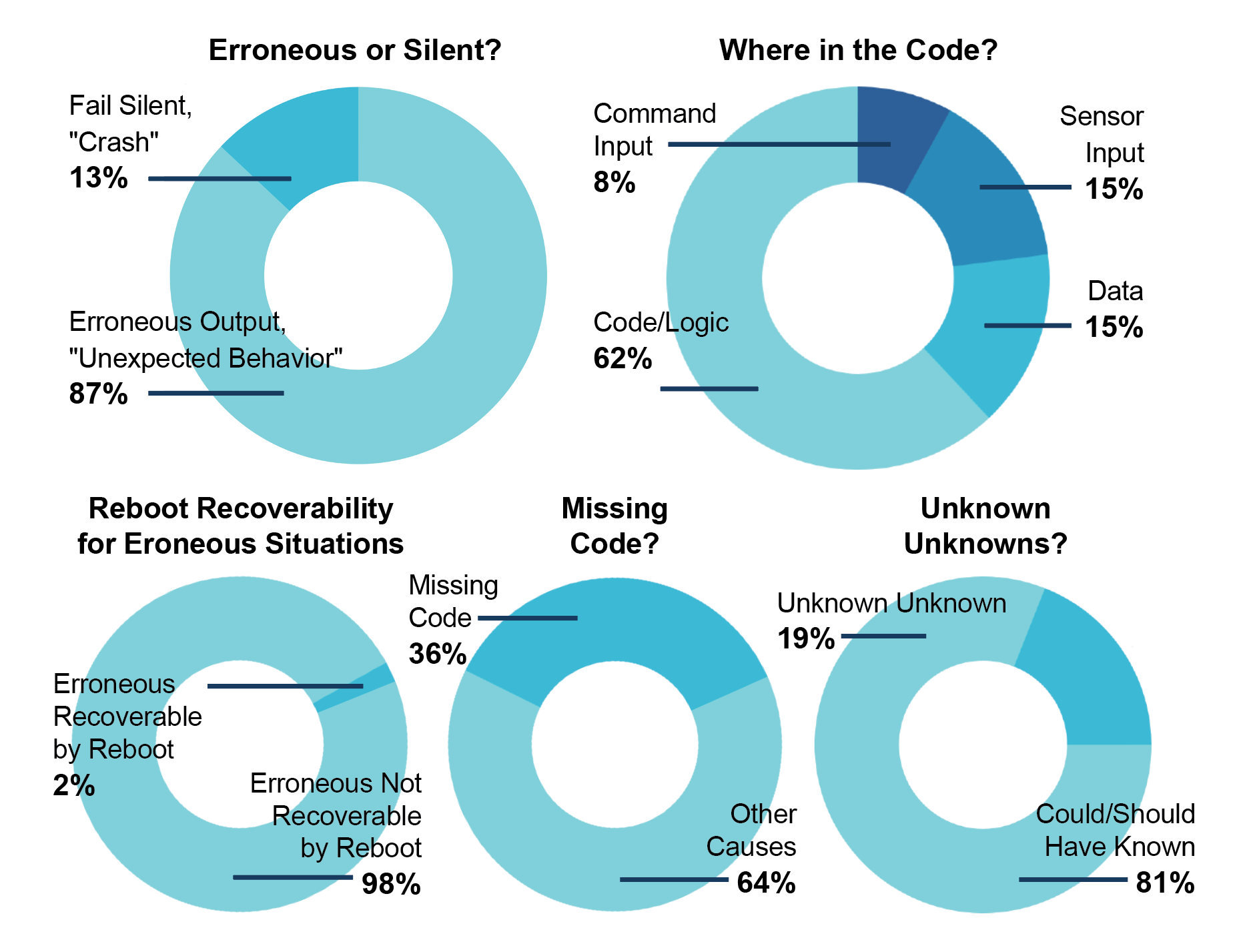

Software creates automation. Reliance on that automation is increasing the amount of software in NASA programs. This year, the software team examined historical software incidents in aerospace to characterize how, why, and where software or automation is most likely to fail. The goal is to better engineer software to minimize the risk of errors, improve software processes, and better architect software for resilience to errors (or improve fault tolerance should errors occur).

Some key findings, shown in the charts above, indicate that software more often does the wrong thing than simply crashes. Rebooting was found to be ineffective when software behaves erroneously. Unexpected behavior was mostly attributed to the code or logic itself, and about half of those instances were the result of missing software: software not present due to unanticipated situations or missing requirements. This may indicate that even fully tested software is exposed to this significant class of error. Data misconfiguration was a sizeable factor that continues to grow with the advent of more modern data-driven systems. A final, subjective category assessed was “unknown unknowns,” things that could not have been reasonably anticipated. These accounted for 19% of the software incidents studied.

The software team is using and sharing these findings to improve best practices. More emphasis is being placed on the importance of complete requirements, off-nominal test campaigns, and “test as you fly” using real hardware in the loop. When designing systems for fault tolerance, more consideration should be given to detecting and correcting erroneous behavior rather than just checking for a crash. Less confidence should be placed on rebooting as an effective recovery strategy. Given the historic prevalence of absent software and unknown unknowns, critical applications should employ backup strategies for their automation. More information can be found in NASA/TP-20230012154, Software Error Incident Categorizations in Aerospace.
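As an illustration of checking for erroneous behavior rather than only for a crash, the following is a minimal sketch, not taken from the NASA report. The class `MonitoredCommand`, its limits, and the two control functions are hypothetical names and values chosen for the example. The wrapper rejects a primary output that is out of range or changes implausibly fast, and falls back to a simpler backup in that case.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Tuple


@dataclass
class MonitoredCommand:
    """Wraps a primary automation with a plausibility check and a backup.

    All limits are assumed, illustrative values, not flight parameters.
    """
    primary: Callable[[float], float]
    backup: Callable[[float], float]
    low: float        # lowest plausible output
    high: float       # highest plausible output
    max_step: float   # largest plausible change between cycles
    last: Optional[float] = None

    def plausible(self, value: float) -> bool:
        if value != value:  # NaN never equals itself
            return False
        if not (self.low <= value <= self.high):
            return False
        if self.last is not None and abs(value - self.last) > self.max_step:
            return False
        return True

    def step(self, sensor: float) -> Tuple[float, str]:
        """Return (command, source); source is 'primary' or 'backup'."""
        try:
            value, source = self.primary(sensor), "primary"
        except Exception:
            # A crash is only one failure mode; it is handled here.
            value, source = self.backup(sensor), "backup"
        else:
            # The primary ran to completion, but its answer may be wrong.
            if not self.plausible(value):
                value, source = self.backup(sensor), "backup"
        self.last = value
        return value, source


def buggy_primary(error: float) -> float:
    # Hypothetical control law with a logic error: it never crashes,
    # but returns an absurd command once the input reaches 10.
    return 0.5 * error if error < 10 else 1e6


def simple_backup(error: float) -> float:
    # Deliberately simple, independently written fallback.
    return max(-1.0, min(1.0, 0.1 * error))


cmd = MonitoredCommand(buggy_primary, simple_backup,
                       low=-5.0, high=5.0, max_step=2.0)
results = [cmd.step(e) for e in (2.0, 4.0, 12.0)]
# results == [(1.0, 'primary'), (2.0, 'primary'), (1.0, 'backup')]
```

A reboot would not help in the third cycle, because the primary would recompute the same wrong answer. The independent backup is what recovers the situation.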

Employing AI and Machine Learning Techniques

The rise of artificial intelligence (AI) and machine learning (ML) techniques has allowed NASA to examine data in ways that were not previously possible. While NASA has been employing autonomy since its inception, AI/ML techniques give teams the ability to expand the use of autonomy beyond previous bounds. The Agency has been working on AI ethics frameworks and examining standards, procedures, and practices, taking security implications into account. AI/ML generally uses nondeterministic statistical algorithms, which currently limits its use in safety-critical flight applications, but NASA uses it in more than 400 projects aiding research and science. The Agency also uses AI/ML Communities of Practice for sharing knowledge across the centers. The TDT surveyed AI/ML work across the Agency and summarized it for trends and lessons.

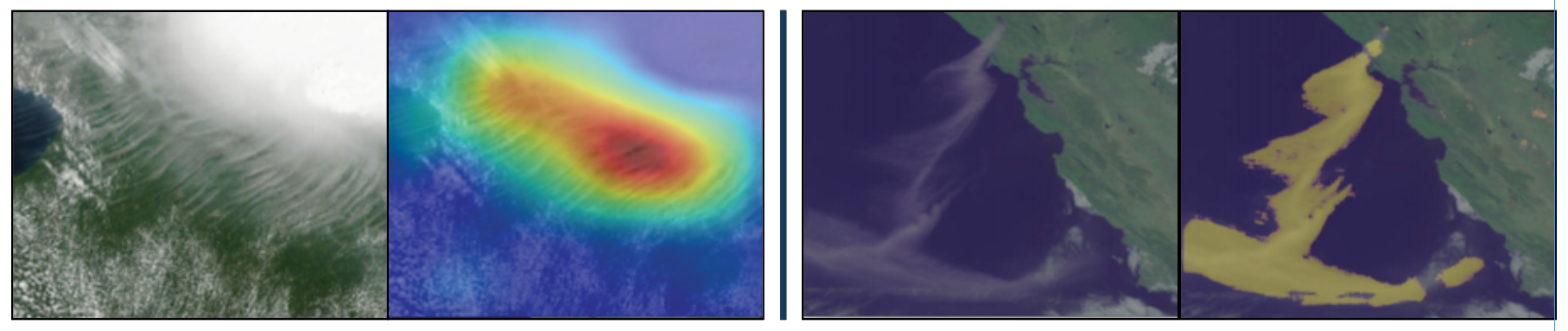

Common uses of AI/ML include image recognition and identification. NASA Earth science missions use AI/ML to identify marine debris, measure cloud thickness, and identify wildfire smoke (examples are shown in the satellite images below). This reduces the workload on personnel. AI/ML is also widely applied to predicting atmospheric physics. One example is hurricane track and intensity prediction. Another is predicting planetary boundary layer thickness and comparing it against measurements. Those predictions are being fused with live data to improve performance over previous boundary layer models.

Examples of how NASA uses AI/ML. Satellite images of clouds with estimation of cloud thickness (left) and wildfire detection (right).


The Code Analysis Pipeline: Static Analysis Tool for IV&V and Software Quality Improvement

The Code Analysis Pipeline (CAP) is an open-source tool architecture that supports software development and assurance activities, improving overall software quality. The Independent Verification and Validation (IV&V) Program is using CAP to support software assurance on the Human Landing System, Gateway, Exploration Ground Systems, Orion, and Roman. CAP supports the configuration and automated execution of multiple static code analysis tools to identify potential code defects, generate code metrics that indicate potential areas of quality concern (e.g., cyclomatic complexity), and execute any other tool that analyzes or processes source code. The TDT is focused on integrating Modified Condition/Decision Coverage analysis support for coverage testing. Results from tools are consolidated into a central database and presented in context through a user interface that supports review, query, reporting, and analysis of results as the code matures.
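To make the cyclomatic complexity metric mentioned above concrete, the sketch below computes an approximate score for Python functions using the standard `ast` module. It is not CAP's implementation, and the tools CAP actually runs are not named in this article. The function names, the set of counted node types, and the threshold are assumptions made for illustration.

```python
import ast

# Node types treated as one extra decision point each. This is a
# simplification: comprehension filters, for example, are not counted.
_BRANCH_NODES = (ast.If, ast.For, ast.While, ast.ExceptHandler, ast.IfExp)


def cyclomatic_complexity(func: ast.AST) -> int:
    """Approximate McCabe complexity: 1 + number of decision points."""
    complexity = 1
    for node in ast.walk(func):
        if isinstance(node, _BRANCH_NODES):
            complexity += 1
        elif isinstance(node, ast.BoolOp):
            # 'a and b and c' adds two short-circuit branches.
            complexity += len(node.values) - 1
    return complexity


def flag_complex_functions(source: str, threshold: int = 10) -> dict:
    """Map each function name to (score, exceeds_threshold).

    Nested functions are also counted toward their enclosing function.
    """
    report = {}
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            score = cyclomatic_complexity(node)
            report[node.name] = (score, score > threshold)
    return report


# Hypothetical code under analysis.
SAMPLE = '''
def select_mode(alt, speed, armed):
    if armed and alt > 100:
        for _ in range(3):
            if speed > 50 or alt > 1000:
                return "cruise"
    return "hold"
'''

report = flag_complex_functions(SAMPLE, threshold=4)
# report == {'select_mode': (6, True)}
```

A high score does not prove a defect. It marks code with many paths, where review and test effort may be best spent.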

The tool architecture is based on an industry standard DevOps approach for continuous building of source code and running of tools. CAP integrates with GitHub for source code control, uses Jenkins to support automation of analysis builds, and leverages Docker to create standard and custom build environments that support unique mission needs and use cases.
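The consolidation of results from several tools into a central database, described in this section, can be sketched as follows. This is an assumed, minimal design using Python's built-in `sqlite3` module. The schema, the function names, and the tool and file names are all hypothetical and do not reflect CAP's actual database or interfaces.

```python
import sqlite3
from typing import Iterable, List, Tuple

# (tool, file path, line number, message); an assumed common format.
Finding = Tuple[str, str, int, str]


def open_results_db(path: str = ":memory:") -> sqlite3.Connection:
    """Create (or open) the central findings table."""
    conn = sqlite3.connect(path)
    conn.execute(
        """CREATE TABLE IF NOT EXISTS findings (
               build TEXT, tool TEXT, file TEXT, line INTEGER, message TEXT,
               UNIQUE (build, tool, file, line, message))"""
    )
    return conn


def record_findings(conn: sqlite3.Connection, build: str,
                    findings: Iterable[Finding]) -> None:
    """Store one analysis run; re-running a build adds no duplicates."""
    conn.executemany(
        "INSERT OR IGNORE INTO findings VALUES (?, ?, ?, ?, ?)",
        [(build, *f) for f in findings],
    )
    conn.commit()


def hotspots(conn: sqlite3.Connection, build: str) -> List[Tuple[str, int]]:
    """Files ranked by number of findings across all tools for a build."""
    return conn.execute(
        "SELECT file, COUNT(*) AS n FROM findings WHERE build = ? "
        "GROUP BY file ORDER BY n DESC, file",
        (build,),
    ).fetchall()


conn = open_results_db()
run = [
    ("tool_a", "gnc.c", 10, "unused variable"),
    ("tool_b", "gnc.c", 42, "possible null dereference"),
    ("tool_a", "io.c", 7, "unchecked return value"),
]
record_findings(conn, "build-1", run)
record_findings(conn, "build-1", run)  # repeated run is ignored
ranking = hotspots(conn, "build-1")
# ranking == [('gnc.c', 2), ('io.c', 1)]
```

Keying each row by build lets a reviewer compare findings as the code matures, which is the kind of query the article attributes to the user interface.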

Improving Software Process & Sharing Best Practices

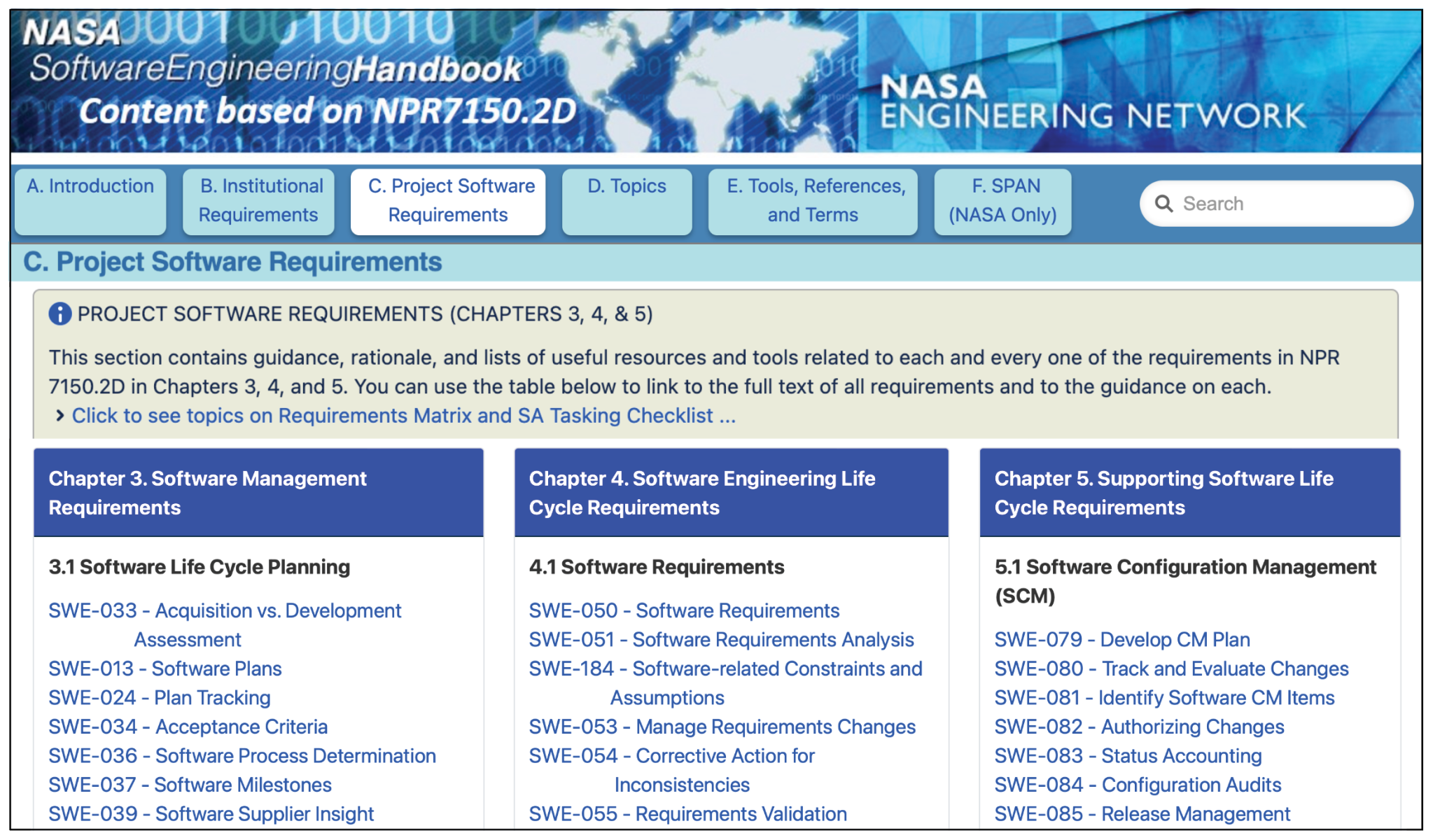

The TDT has captured best-practice knowledge from across the centers in NPR 7150.2, NASA Software Engineering Requirements, and NASA-HDBK-2203, NASA Software Engineering and Assurance Handbook (https://swehb.nasa.gov). Two APPEL training classes have been developed and shared with several organizations to give them a foundation in the NPR and in software engineering management. The TDT established several subteams to help programs and projects as they tackle software architecture, project management, requirements, cybersecurity, testing and verification, and programmable logic controllers. Many of these teams have developed guidance and best practices, which are documented in NASA-HDBK-2203 and on the NASA Engineering Network.

NPR 7150.2 and the handbook outline best practices over the full lifecycle for all NASA software. This includes requirements development, architecture, design, implementation, and verification. Also covered, and equally important, are the supporting activities and functions that improve quality, including software assurance, safety, configuration management, reuse, and software acquisition. Rationale and guidance for the requirements are addressed in the handbook, which is internally and externally accessible and regularly updated as new information, tools, and techniques are found and used.

The Software TDT deputies train software engineers, systems engineers, chief engineers, and project managers on the NPR requirements and their role in ensuring these requirements are implemented across NASA centers. Additionally, the TDT deputies train software technical leads on many of the advanced management aspects of a software engineering effort, including planning, cost estimating, negotiating, and handling change management.